We live in a world where information is free, but that doesn’t mean information is good. Trusted information — about products specifically — has been our raison d’être at Consumer Reports since our founding in 1936.

In the 1930s, advertising was exploding for the first time, and there wasn’t much quality information to help Americans distinguish fact from fiction when it came to new products. Technology was rapidly advancing and regulation lagged behind it, which is not too different from the circumstances we find ourselves in today.

If anything, the hidden influence of advertising in today’s world is even more pernicious. As HouseFresh editors Gisele Navarro and Danny Ashton posit in “How Google is killing independent sites like ours,” the internet is awash with content marketing that is beating the Google search algorithm. More specifically, they argue:

- Many publishers recommend products without testing them — they simply paraphrase marketing and Amazon listing information.

- Big media publishers then inundate the web with subpar product recommendations that shouldn’t be trusted, spraying this poor information across many brands whose reputable URLs outrank incumbents.

- Media companies do this since they need affiliate revenue to offset falling ad revenue.

In short, it has become commonplace across the web for publishers to recommend products without ever investigating whether the products themselves are any good. And people trust this information, since it comes from a reputable brand that wins in Google rankings.

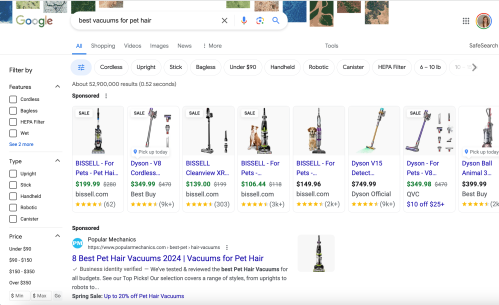

Google Search results “above the fold” show only sponsored content.

These dynamics have been tainting consumer choice and diverting traffic from earnest product review sites like Consumer Reports and HouseFresh for years. But now, generative AI and conversational design patterns are poised to exacerbate consumers’ information issues.

The most widely used large language models, like those offered by OpenAI, Microsoft, and others, are trained on vast swaths of the public internet. That means they’ve slurped up a whole bunch of phony product reviews. When these bad reviews have been mirrored on a panoply of sites, their conclusions become more ingrained in the model’s reasoning and responses. As a result, models are likely to return unverified and often incorrect product information.

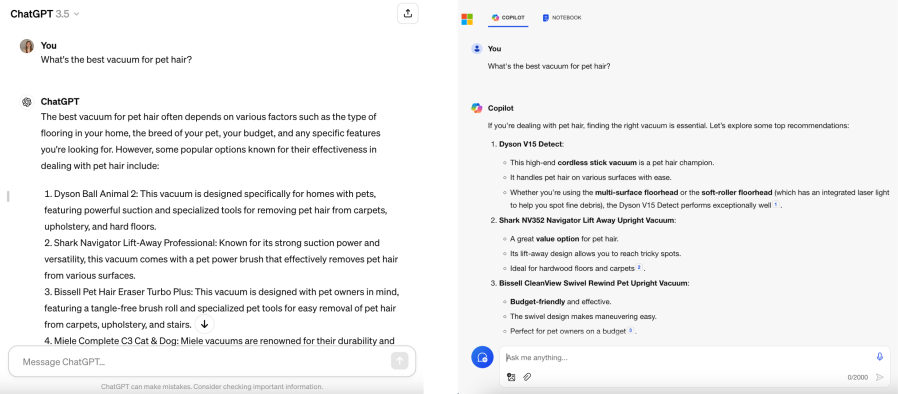

ChatGPT and Bing answer users’ questions directly, limiting the sources they see.

Most consumers who interact with large language models do so via conversational experiences built on top of them, like ChatGPT and Copilot. The design of these conversational interactions tends to elevate one answer, “the answer.” And while these experiences may provide links to grounding or reference material to justify their answers, they are worse at surfacing competing information. Search, the current paradigm for information discovery (though probably not for long), is designed to return a whole page of ranked results, which allows the user to consult multiple sources before drawing a conclusion. As conversational experiences surge in popularity, we fear the days of checking multiple “answers” to a question on the internet could be numbered.

At CR, we’re concerned about how generative AI and conversational design will influence consumer choice. We’ve kicked off prototyping and product development aimed at addressing these issues head-on, and we look forward to sharing more updates soon.